Azure Kubernetes Service (AKS)

Azure Kubernetes Service (AKS) is a fully managed Kubernetes service offered by Microsoft Azure that simplifies the deployment, management and scaling of containerized applications.

AKS enables you to focus on building and running your applications while abstracting away many of the complexities of managing Kubernetes infrastructure. By leveraging AKS, you can achieve faster time-to-market, reduce infrastructure costs, and improve the resiliency and scalability of your applications.

How to Set Up Azure Kubernetes Service

1. Create a resource group

AKS requires an Azure resource group to manage all the resources associated with your cluster. You can create a new resource group using the Azure portal, Azure CLI, or PowerShell.
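With the Azure CLI, this step might look like the sketch below. The resource group name `my-aks-rg` and the `eastus` region are placeholder assumptions, and the commands assume you have already signed in with `az login`.

```shell
# Placeholder values (assumptions): replace with your own name and region.
RESOURCE_GROUP="my-aks-rg"
LOCATION="eastus"

# Create the resource group that will contain the AKS cluster.
az group create --name "$RESOURCE_GROUP" --location "$LOCATION"

# Confirm the group exists and check its provisioning state.
az group show --name "$RESOURCE_GROUP" --output table
```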

2. Create AKS Cluster

When you create an AKS cluster using the Azure Portal, Azure CLI or Azure PowerShell, Azure automatically provisions and configures a Kubernetes control plane and worker nodes on your behalf. The control plane is responsible for managing the state of your Kubernetes cluster and scheduling workloads to run on your worker nodes.

You can customize the configuration of your AKS cluster by specifying options such as:

The Kubernetes version, and the number and size of worker nodes.

The network configuration for your cluster, including the virtual network, subnets and IP address ranges.

Authentication and authorization for your cluster, using Azure Active Directory (now Microsoft Entra ID), Kubernetes RBAC, or both.

The identity that allows AKS to interact with other Azure services, such as Azure Container Registry or Azure Files: either a service principal or a managed identity.
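As a rough illustration, several of these options map to flags on `az aks create`. The names, node count and VM size below are assumptions chosen for the example, not recommendations, and this sketch uses a managed identity rather than a service principal.

```shell
# Placeholder values (assumptions): adjust to your environment.
RESOURCE_GROUP="my-aks-rg"
CLUSTER_NAME="my-aks-cluster"
LOCATION="eastus"

# List the Kubernetes versions available in the region.
# Pass one to "az aks create" with --kubernetes-version to pin it.
az aks get-versions --location "$LOCATION" --output table

# Create a three-node cluster with Azure CNI networking and a managed identity.
az aks create \
  --resource-group "$RESOURCE_GROUP" \
  --name "$CLUSTER_NAME" \
  --node-count 3 \
  --node-vm-size Standard_DS2_v2 \
  --network-plugin azure \
  --enable-managed-identity \
  --generate-ssh-keys
```

Cluster creation typically takes several minutes. Omitting `--kubernetes-version` lets Azure pick its current default version.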

3. Configure Cluster Access

After creating the cluster, you'll need to configure access to the Kubernetes API server. You do this by downloading the cluster's credentials into your local kubeconfig file, identifying the cluster by its name and resource group. The Kubernetes command-line tool (kubectl) then uses those credentials to talk to the cluster.
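One common way to do this is with the Azure CLI, sketched below. The cluster and resource group names are the same placeholder assumptions used for illustration throughout.

```shell
# Placeholder values (assumptions): must match the cluster you created.
RESOURCE_GROUP="my-aks-rg"
CLUSTER_NAME="my-aks-cluster"

# Merge the cluster's credentials into ~/.kube/config.
az aks get-credentials --resource-group "$RESOURCE_GROUP" --name "$CLUSTER_NAME"

# Verify that kubectl can reach the API server and that nodes are Ready.
kubectl get nodes
```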

4. Create Kubernetes Manifest

After setting up the AKS cluster, create your Kubernetes manifest files. You can use any text editor of your choice, such as Notepad++, Visual Studio Code or Sublime Text. A Kubernetes manifest file is a YAML file that describes the desired state of your application, including the containers that make up your application, the resources that they need and the networking configuration, as shown below:

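    apiVersion: v1
    kind: Pod
    metadata:
      name: my-app
      labels:
        name: my-app        # label matched by the Service selector below
    spec:
      containers:
        - name: my-container
          image: my-registry/my-image:latest
          command: ["./my-app"]
          ports:
            - containerPort: 8080
          resources:
            limits:
              cpu: "1"
              memory: "512Mi"
            requests:
              cpu: "0.5"
              memory: "256Mi"
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: my-app          # Service name is an assumption; the original omits it
    spec:
      type: LoadBalancer
      selector:
        name: my-app
      ports:
        - name: http
          port: 80
          targetPort: 8080

5. Deploying Containerized Applications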

Once you have created your Kubernetes manifests, you can use Kubernetes tools such as kubectl to apply them to your AKS cluster as shown below. Kubernetes then schedules your application containers onto worker nodes, based on the containers' resource requests and the capacity available on each node.

    kubectl apply -f my-manifest.yaml

6. Scaling Applications

AKS enables you to scale your applications up or down by increasing or decreasing the number of replicas of your containers. You can do this manually or automatically based on metrics such as CPU or memory utilization.

AKS also supports node scaling, which enables you to automatically add or remove worker nodes from your cluster based on demand. This can help you optimize your resource utilization and reduce costs by scaling your infrastructure up or down as needed.
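The commands below sketch both kinds of scaling. They assume the application runs as a Deployment named `my-app` (a standalone Pod cannot be scaled this way) and reuse the placeholder cluster names. The replica counts, CPU target and node limits are arbitrary example values.

```shell
# Placeholder values (assumptions).
RESOURCE_GROUP="my-aks-rg"
CLUSTER_NAME="my-aks-cluster"
DEPLOYMENT="my-app"   # hypothetical Deployment name

# Manual scaling: run exactly three replicas.
kubectl scale deployment "$DEPLOYMENT" --replicas=3

# Automatic pod scaling: keep average CPU near 70% with 2 to 10 replicas.
# This requires CPU requests to be set on the containers.
kubectl autoscale deployment "$DEPLOYMENT" --cpu-percent=70 --min=2 --max=10

# Automatic node scaling: let the cluster autoscaler run 1 to 5 nodes.
az aks update \
  --resource-group "$RESOURCE_GROUP" \
  --name "$CLUSTER_NAME" \
  --enable-cluster-autoscaler \
  --min-count 1 \
  --max-count 5
```

On clusters with more than one node pool, the autoscaler is usually configured per pool with `az aks nodepool update` instead.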

7. Monitoring Applications

AKS provides built-in monitoring and logging capabilities through integration with Azure Monitor. This enables you to monitor the health and performance of your application and diagnose issues if they arise.

Azure Monitor can collect metrics such as CPU utilization, memory usage, and network traffic for your AKS cluster and application workloads. You can use these metrics to create custom dashboards and alerts that notify you of any issues or anomalies in your environment.
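As a starting point, the monitoring add-on can be enabled from the Azure CLI, and basic resource usage can be checked with kubectl. This is a minimal sketch using the same placeholder names. Without an explicit workspace, the add-on is expected to use a default Log Analytics workspace.

```shell
# Placeholder values (assumptions).
RESOURCE_GROUP="my-aks-rg"
CLUSTER_NAME="my-aks-cluster"

# Enable the Azure Monitor (Container insights) add-on on an existing cluster.
az aks enable-addons \
  --resource-group "$RESOURCE_GROUP" \
  --name "$CLUSTER_NAME" \
  --addons monitoring

# Quick look at current CPU and memory usage from the metrics server.
kubectl top nodes
kubectl top pods --all-namespaces
```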

8. Manage Cluster

AKS provides a variety of management capabilities, such as automatic upgrades to the Kubernetes version, node auto-scaling, and automatic repair of failed nodes. These capabilities help you simplify the operation of your AKS cluster and reduce the amount of manual maintenance required.
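For example, upgrades can be inspected and automated from the Azure CLI. The sketch below uses the placeholder names from earlier, and the `stable` channel is just one possible choice.

```shell
# Placeholder values (assumptions).
RESOURCE_GROUP="my-aks-rg"
CLUSTER_NAME="my-aks-cluster"

# Show which Kubernetes versions the cluster can be upgraded to.
az aks get-upgrades \
  --resource-group "$RESOURCE_GROUP" \
  --name "$CLUSTER_NAME" \
  --output table

# Opt in to automatic upgrades on the "stable" channel.
az aks update \
  --resource-group "$RESOURCE_GROUP" \
  --name "$CLUSTER_NAME" \
  --auto-upgrade-channel stable
```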

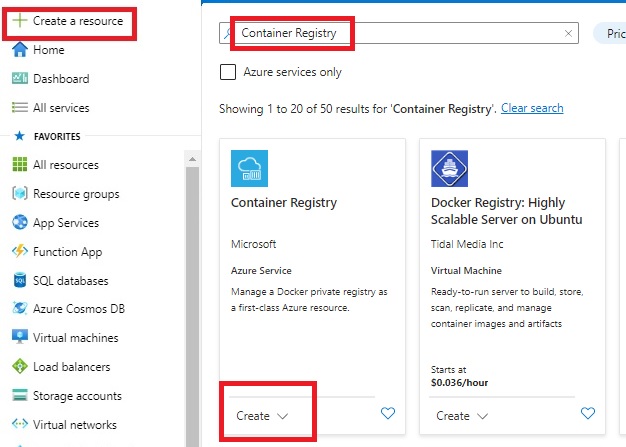

AKS also integrates with other Azure services such as Azure Container Registry (ACR) for storing and managing container images, and Azure DevOps for continuous integration and deployment of your containerized applications. These integrations enable you to streamline your application development and deployment workflows, and help you achieve faster time-to-market.
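A minimal sketch of the ACR integration follows. The registry name `myregistry` is a hypothetical placeholder (registry names must be globally unique), and the `Basic` SKU is chosen only to keep the example small.

```shell
# Placeholder values (assumptions).
RESOURCE_GROUP="my-aks-rg"
CLUSTER_NAME="my-aks-cluster"
ACR_NAME="myregistry"   # hypothetical registry name

# Create a container registry in the same resource group.
az acr create --resource-group "$RESOURCE_GROUP" --name "$ACR_NAME" --sku Basic

# Grant the cluster pull access to the registry.
az aks update \
  --resource-group "$RESOURCE_GROUP" \
  --name "$CLUSTER_NAME" \
  --attach-acr "$ACR_NAME"
```

After attaching, pods can reference images as `myregistry.azurecr.io/<image>:<tag>` without separate image pull secrets.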

Best Practices for Azure Kubernetes Service

Follow these best practices when using Azure Kubernetes Service (AKS):

1. Use RBAC to control access to your AKS cluster and resources. Create and assign roles and role bindings to ensure that only authorized users and services have access to your cluster.

2. Use namespaces to organize your AKS cluster resources, and use RBAC to control access to these namespaces.

3. Use resource quotas to limit the amount of CPU, memory, and other resources that the workloads in each namespace can consume.

4. Use node pools to optimize resource utilization by creating separate pools of nodes with different resource characteristics, and assigning pods to the appropriate node pool based on their resource requirements.

5. Use Azure managed identities for services to provide secure access to Azure resources from within your AKS cluster, without the need for secrets or credentials.

6. Use a container registry such as Azure Container Registry to store and manage your container images, and use RBAC to control access to the registry.

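7. Use liveness and readiness probes to monitor the health of your application. Liveness probes let Kubernetes restart unhealthy containers automatically, and readiness probes keep traffic away from containers that are not yet ready to serve it.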

8. Use the Horizontal Pod Autoscaler (HPA) to automatically scale the number of pods in your deployment based on resource utilization and application demand.

9. Use network policies to control network traffic between pods and services in your AKS cluster, and limit access to only the necessary services and ports.

10. Use Azure Monitor and Azure Log Analytics to monitor and log your AKS cluster and applications, and use this data to troubleshoot issues and optimize performance.
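Several of these practices (namespaces, RBAC, resource quotas and network policies) can be sketched with a few kubectl commands. The namespace `team-a`, the user `dev@example.com` and the quota values are hypothetical. The network policy only takes effect if the cluster was created with a network policy engine enabled.

```shell
# Hypothetical names (assumptions).
NAMESPACE="team-a"
DEV_USER="dev@example.com"

# Practice 2: a dedicated namespace for one team or application.
kubectl create namespace "$NAMESPACE"

# Practice 1: read-only access for one user, limited to that namespace,
# using the built-in "view" ClusterRole.
kubectl create rolebinding team-a-view \
  --clusterrole=view \
  --user="$DEV_USER" \
  --namespace "$NAMESPACE"

# Practice 3: cap the total CPU, memory and pod count in the namespace.
kubectl create quota team-a-quota \
  --hard=requests.cpu=4,requests.memory=8Gi,pods=20 \
  --namespace "$NAMESPACE"

# Practice 9: deny all ingress traffic to pods in the namespace by default.
# More specific policies can then allow only the traffic that is needed.
cat > default-deny-ingress.yaml <<EOF
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: default-deny-ingress
  namespace: $NAMESPACE
spec:
  podSelector: {}
  policyTypes:
    - Ingress
EOF
kubectl apply -f default-deny-ingress.yaml
```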

How to choose among AKS, Docker Swarm and Amazon ECS?

Choosing among AKS (Azure Kubernetes Service), Docker Swarm, and Amazon ECS (Elastic Container Service) depends on factors such as the requirements of your application, the size and complexity of your environment, and the expertise of your team. Here are some considerations to help you make a decision:

Architecture

AKS is based on Kubernetes, while Docker Swarm and Amazon ECS each use their own orchestration model: Swarm is built into Docker Engine, and ECS is a proprietary AWS service. If you are already familiar with Kubernetes, AKS may be the best choice for you. If you prefer a simpler, more streamlined architecture, Docker Swarm or Amazon ECS may be a better fit.

Scalability

If scalability is a primary concern, all three platforms are capable of scaling horizontally and vertically. However, Kubernetes (and therefore AKS) has more built-in features for auto-scaling and load balancing, such as the Horizontal Pod Autoscaler and the cluster autoscaler, than Docker Swarm and Amazon ECS.

Flexibility

Kubernetes and AKS offer a wider range of configuration options and are more customizable than Docker Swarm and Amazon ECS. If you need more flexibility in how you configure and manage your container environment, Kubernetes and AKS may be the best choice for you.

Integration With Other Services

If you are already using other services in the Azure, Docker, or Amazon ecosystems, it may be easier to integrate with AKS, Docker Swarm, or Amazon ECS respectively. Consider which platform has the best integration with the services you are currently using.

Multi-cloud support

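If you plan to run your containers across multiple cloud providers, Kubernetes may be the best choice. AKS itself runs in Azure, but the manifests you write for it are standard Kubernetes and can be moved to Kubernetes services on other clouds. Amazon ECS is designed to work within the AWS ecosystem, while Docker Swarm can run wherever Docker Engine is available.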

Skill level

Consider the skill level of your team. If your team is already familiar with Docker, Docker Swarm may be the easiest to use. If your team has experience with Kubernetes, AKS may be the best choice. If your team is new to container orchestration, Amazon ECS may be the easiest to learn.

Ultimately, the best choice depends on your specific needs and requirements. It may be helpful to try out each platform before making a decision.
